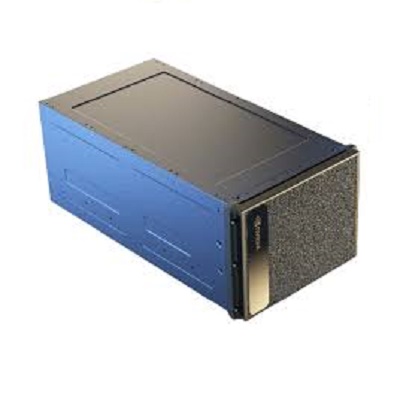

NVIDIA DGX H800 – 640GB SXM5 GPU Memory and 2TB RAM for AI at Scale

The NVIDIA DGX H800 640GB SXM5 2TB is engineered for high-impact AI deployments. It delivers enterprise-grade compute for deep learning, LLM training, and generative AI workloads. The system is powered by eight H800 SXM5 GPUs with 640GB of total GPU memory and is backed by 2TB of system RAM. This makes the DGX H800 a strong fit for organizations scaling AI from training through production across multiple environments.

This system represents the evolution of the DGX platform, purpose-built to deliver next-level training speed, model parallelism, and efficiency. Whether you are fine-tuning foundation models or building next-generation AI applications, the DGX H800 ensures you’re operating at peak computational efficiency.

Specifications

| Specification | Description |
|---|---|
| GPU | 8x NVIDIA H800 Tensor Core GPUs |
| GPU memory | 640GB total |
| Performance | 32 petaFLOPS FP8 |
| NVIDIA® NVSwitch™ | 4x |
| System power usage | 10.2kW max |
| CPU | Dual Intel® Xeon® Platinum 8480C processors; 112 cores total; base 2.00 GHz, max boost 3.80 GHz |
| System memory | 2TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI (up to 400Gb/s InfiniBand/Ethernet); 2x dual-port QSFP112 NVIDIA ConnectX-7 VPI (up to 400Gb/s InfiniBand/Ethernet); 10Gb/s onboard NIC with RJ45; 100Gb/s Ethernet NIC |
| Management network | Host baseboard management controller (BMC) with RJ45 |
| Storage | Internal storage: 2x 1.92TB NVMe M.2; 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise (optimized AI software); NVIDIA Base Command (orchestration, scheduling, and cluster management); DGX OS / Ubuntu / Red Hat Enterprise Linux / Rocky (operating system) |
| Support | 3-year business-standard hardware and software support |
| System weight | 287.6lbs (130.45kg) |
| Packaged system weight | 376lbs (170.45kg) |
| System dimensions | Height: 14.0in (356mm); Width: 19.0in (482.2mm); Length: 35.3in (897.1mm) |
| Operating temperature range | 5-30°C (41-86°F) |

Ideal for Research, Data Centers, and AI Infrastructure

Designed for elite-level performance, the DGX H800 serves as the cornerstone of modern AI infrastructure. Other top-tier solutions include the Supermicro A+ Server AS-8125GS-TNHR and the Quanta S7PH H100 GPU Server D74H-7U. Compared with these, the DGX H800 offers tighter hardware integration, an NVIDIA-optimized software stack, and greater scalability for enterprise-wide AI initiatives.

If you’re exploring multi-GPU architectures, the NVIDIA HGX H100 Delta-Next 640GB SXM5 provides another powerful option for custom server builds, but the DGX H800 remains unmatched in plug-and-play AI supercomputing.

Future-Proof Your AI Stack with NVIDIA DGX H800

Whether you’re a research institute scaling LLMs or a business deploying AI-as-a-service, the DGX H800 provides the speed, memory, and bandwidth to keep you ahead. It reduces bottlenecks, shortens training times, and integrates seamlessly with NVIDIA’s enterprise software ecosystem. With 640GB of GPU memory across eight SXM5 GPUs and 2TB of system memory, it’s a server built for the future of AI compute.

Now available on DeciMiners – tap into the next generation of AI performance with the NVIDIA DGX H800.

Angel (verified owner) –

Perfectly working machine.

Thea (verified owner) –

Perfectly working machine.

Rico (verified owner) –

Came in good shape.

Emilia (verified owner) –

Very fast delivery.

Giovanni (verified owner) –

The product is firmly packed.

Chiara (verified owner) –

Very well worth the money.

Angel (verified owner) –

Came in good shape.

Sven (verified owner) –

Very well worth the money.

Vittorio (verified owner) –

Very fast delivery.

Emilia (verified owner) –

Perfectly working machine.

Elio (verified owner) –

Very well worth the money.

Finn (verified owner) –

Very well worth the money.

Greta (verified owner) –

Very well worth the money.

Greta (verified owner) –

Came in good shape.